Never imagine yourself not to be otherwise than what it might appear to others that what you were or might have been was not otherwise than what you had been would have appeared to them to be otherwise.

———Lewis Carroll, Alice in Wonderland

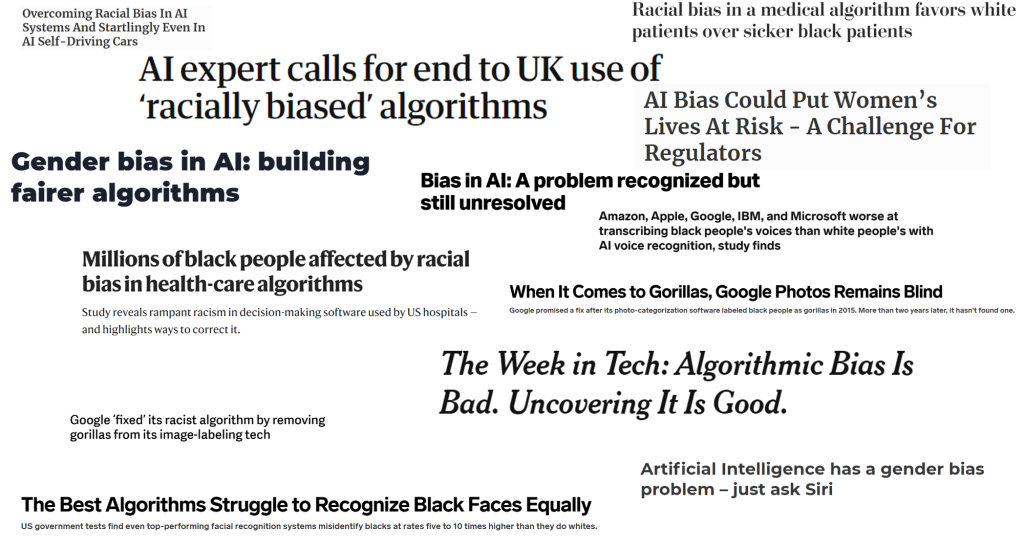

Algorithmic bias refers to the deviation of information from a neutral and objective position as it is produced, aggregated, and pushed to users. As a result, information may contradict the facts or be disseminated unfairly, which ultimately affects the judgments the public makes on the basis of that information. Algorithmic bias is mainly shaped by three factors: a value system that prioritizes traffic, the misreading of personalized user profiles, and structural bias in the original database.

The lives of the public are transformed into data, and the extracted information is packaged by capitalist enterprises and resold to the public (Couldry and Mejias, 2019). To improve user retention, platforms use algorithms to push content that users may be interested in. Algorithms thus give platforms greater power to decide which content reaches which users. The choice of what to browse shifts from the user to the algorithm, and from there to capital and to organizations that hold power, and the information cocoon is strengthened in the process (Peng and Liu, 2021). If deliberate push logic produces algorithmic bias, a polluted database gives rise to it as well. Racism is reinforced by algorithms, further exacerbating social conflict (Noble, 2018).
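As a rough illustration of how a traffic-first push logic can strengthen the information cocoon, here is a minimal Python sketch. It is a toy model, not any platform's actual recommender: the function `simulate_feed`, the topic names, and the click rates are all hypothetical, and the update rule (multiply each topic's share of the feed by one plus its click rate, then renormalize) is an assumption chosen for simplicity.

```python
def simulate_feed(click_rates, rounds):
    """Return each topic's share of the feed after every round.

    click_rates: hypothetical probability that the user clicks a topic.
    The feed starts perfectly balanced; after each round the platform
    boosts every topic in proportion to the engagement it produced.
    """
    topics = list(click_rates)
    share = {t: 1 / len(topics) for t in topics}  # equal exposure at the start
    history = [dict(share)]
    for _ in range(rounds):
        # Assumed update rule: exposure grows with engagement.
        weighted = {t: share[t] * (1 + click_rates[t]) for t in topics}
        total = sum(weighted.values())
        # Renormalize so the shares always add up to 1.
        share = {t: w / total for t, w in weighted.items()}
        history.append(dict(share))
    return history


if __name__ == "__main__":
    # Hypothetical user with only slightly different interests.
    rates = {"sports": 0.50, "politics": 0.45, "science": 0.40}
    history = simulate_feed(rates, rounds=100)
    for step in (0, 10, 50, 100):
        print(step, {t: round(s, 2) for t, s in history[step].items()})
```

Under these assumptions, a small initial difference in interest is enough for one topic to take over most of the feed, which is the narrowing that the information cocoon describes. A real system would be far more complex, but the feedback loop has the same shape.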

The database behind almost every machine learning algorithm is biased. Manual labeling services have become a quintessential business model, and many tech companies outsource vast amounts of data for labeling, so the annotators’ own judgments end up in the training data. This means that algorithmic biases are propagated and amplified through a process that is largely invisible and that lends them legitimacy.

As a by-product of algorithm development, algorithmic bias shows how much algorithms depend on data integrity. We might argue that algorithmic bias is inevitable, because the spread of disinformation in a democratic society pollutes the overall database (Bennett and Livingston, 2018). From the public’s point of view, these algorithms may make our lives easier, since they know exactly what we want and don’t want, but in the process personal information is leaked and controlled by algorithms.

By the way, as you read this blog, please consider: are you affected by algorithmic bias?

References

Bennett, W. L., & Livingston, S. (2018). The disinformation order: Disruptive communication and the decline of democratic institutions. European Journal of Communication, 33(2), 122-139. https://doi-org.uow.idm.oclc.org/10.1177/0267323118760317

Couldry, N., & Mejias, U. A. (2019). The Costs of Connection: How Data Is Colonizing Human Life and Appropriating It for Capitalism. Stanford, California: Stanford University Press (Culture and Economic Life). Available at: https://search-ebscohost-com.uow.idm.oclc.org/login.aspx?direct=true&db=nlebk&AN=2160834&site=ehost-live&scope=site (Accessed: 28 November 2024).

Noble, S. U. (2018). Algorithms of Oppression: How Search Engines Reinforce Racism. New York: New York University Press. Available from: ProQuest Ebook Central (Accessed: 28 November 2024).

Peng, H., & Liu, C. (2021). Breaking the information cocoon: When do people actively seek conflicting information? Proceedings of the Association for Information Science and Technology, 58(1), 801-803.